Hey, I'm Om

I'm a CS student at UT Austin, passionate about building solutions to real problems.

Here are some notes to myself.

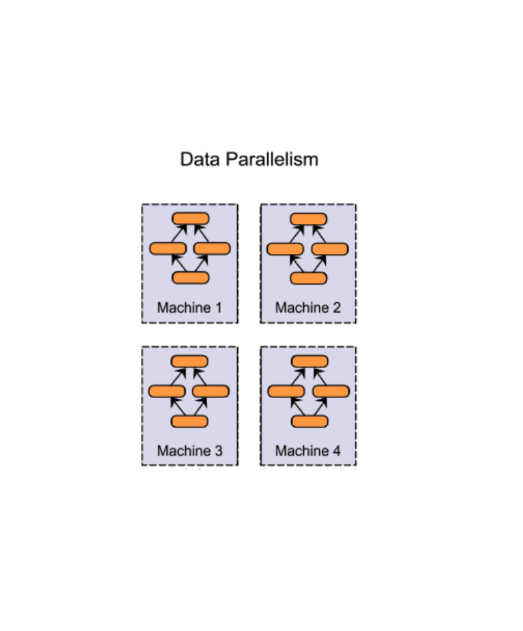

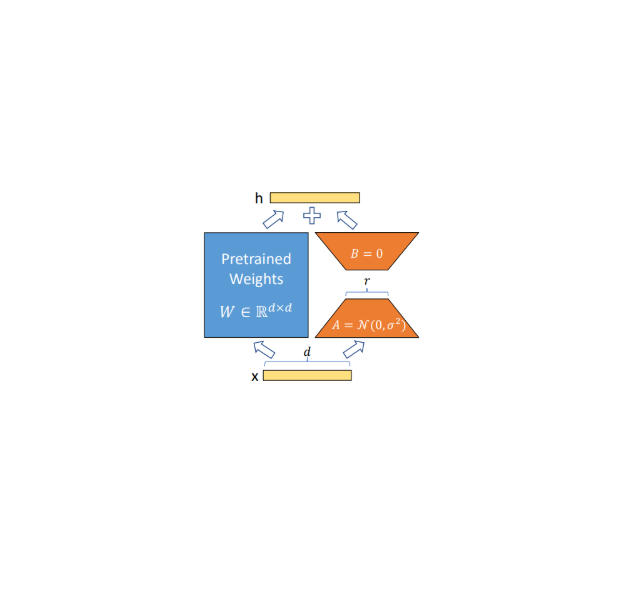

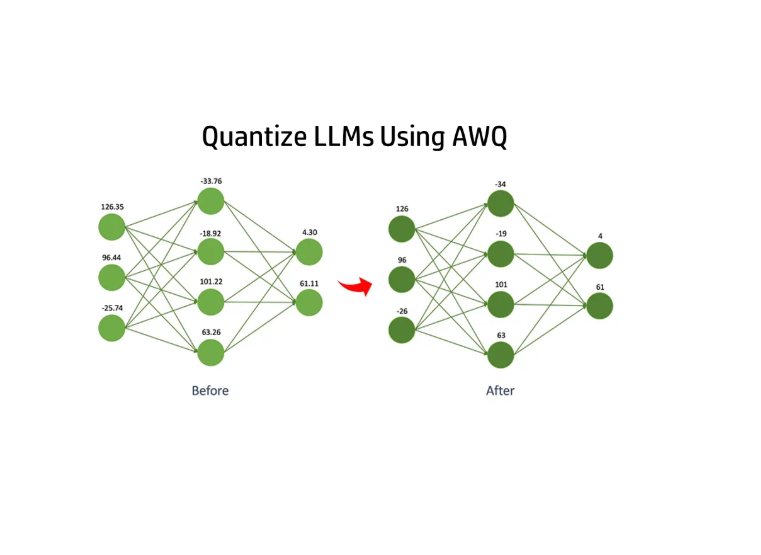

Pre-training a 10M-parameter GPT from scratch on 300K tokens on an A100 GPU.
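To give a rough sense of scale for "10M", here is a back-of-the-envelope parameter count for a decoder-only GPT. The config is a hypothetical example (6 layers, width 384, a 65-token character vocabulary, 256-token context), not necessarily the one used for this run. The count assumes bias-free linear layers, a 4x MLP expansion, and an output head tied to the token embedding.

```python
def gpt_param_count(n_layer: int, d_model: int, vocab_size: int,
                    block_size: int, tied_head: bool = True) -> int:
    """Rough parameter count for a decoder-only GPT (hypothetical config)."""
    tok_emb = vocab_size * d_model          # token embedding table
    pos_emb = block_size * d_model          # learned positional embeddings
    attn = 4 * d_model * d_model            # Q, K, V and output projections, no biases
    mlp = 2 * d_model * (4 * d_model)       # d -> 4d -> d feed-forward, no biases
    ln = 2 * d_model                        # two LayerNorm weight vectors per block
    blocks = n_layer * (attn + mlp + ln)
    final_ln = d_model                      # LayerNorm before the output head
    head = 0 if tied_head else vocab_size * d_model  # a tied head reuses tok_emb
    return tok_emb + pos_emb + blocks + final_ln + head


print(f"{gpt_param_count(6, 384, 65, 256) / 1e6:.2f}M")  # → 10.75M
```

With these assumed settings, almost all of the parameters sit in the transformer blocks (about 12·d² per layer), not in the embeddings.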

A Git-like chat session management tool for coding agents. The implementation is available as an open-source MCP server, along with a modified mini-swe-agent integration for benchmarking.

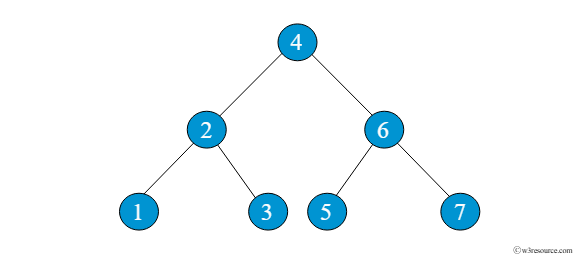

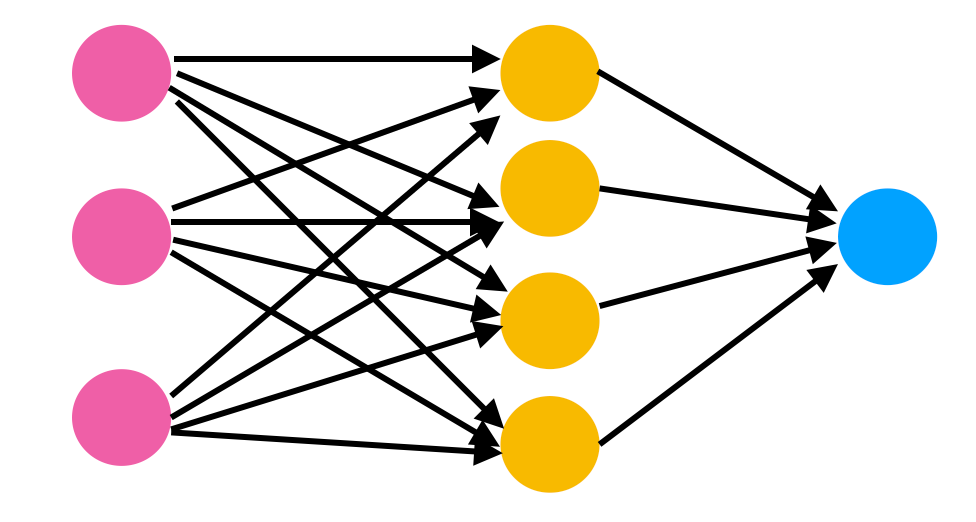

Developed an autograd engine that implements backpropagation (reverse-mode autodiff) over dynamically built DAGs of scalar operations, and built a PyTorch-style neural network library atop Micrograd.
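A minimal sketch of the core idea, assuming a micrograd-style design. The `Value` and `Neuron` names and methods here are illustrative, not necessarily the ones in the project. Each operation records its inputs plus a local `_backward` closure. `backward()` then topologically sorts the DAG and applies the chain rule in reverse order, accumulating gradients with `+=` so that a node used more than once receives contributions from every path.

```python
import math
import random


class Value:
    """A scalar node in a dynamically built computation graph (DAG)."""

    def __init__(self, data, _children=(), _op=""):
        self.data = data
        self.grad = 0.0
        self._backward = lambda: None  # pushes self.grad into this node's inputs
        self._prev = set(_children)
        self._op = _op

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other), "+")

        def _backward():
            # d(a + b)/da = d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other), "*")

        def _backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    __rmul__ = __mul__

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,), "tanh")

        def _backward():
            self.grad += (1 - t * t) * out.grad  # d tanh(x)/dx = 1 - tanh(x)^2

        out._backward = _backward
        return out

    def backward(self):
        # Topological order guarantees a node's grad is complete before it propagates.
        topo, visited = [], set()

        def build(v):
            if v not in visited:
                visited.add(v)
                for child in v._prev:
                    build(child)
                topo.append(v)

        build(self)
        self.grad = 1.0
        for v in reversed(topo):
            v._backward()


class Neuron:
    """A PyTorch-style module built on Value: tanh(w . x + b)."""

    def __init__(self, n_in):
        self.w = [Value(random.uniform(-1, 1)) for _ in range(n_in)]
        self.b = Value(0.0)

    def __call__(self, xs):
        act = sum((wi * xi for wi, xi in zip(self.w, xs)), self.b)
        return act.tanh()

    def parameters(self):
        return self.w + [self.b]


x, y = Value(2.0), Value(-3.0)
z = x * y + x  # x is used twice, so its gradient accumulates from both paths
z.backward()
print(z.data, x.grad, y.grad)  # → -4.0 -2.0 2.0
```

The `Neuron` only hints at the library layer. In the same spirit, layers and MLPs are lists of neurons whose `parameters()` lists are concatenated, so a training loop can zero and update every `Value` uniformly.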